FLAIR-1-2

- huggingface.co

Cite: Institut national de l'information géographique et forestière (2025). FLAIR-1-2 [Dataset]. https://huggingface.co/datasets/IGNF/FLAIR-1-2

- Dataset updated: Jun 4, 2025
- Dataset authored and provided by: Institut national de l'information géographique et forestière
- License: https://choosealicense.com/licenses/etalab-2.0/

Description: Dataset Card for FLAIR land-cover semantic segmentation

Context & Data: The FLAIR (#1 and #2) dataset is sampled countrywide and comprises over 20 billion annotated pixels of very-high-resolution aerial imagery at 0.2 m spatial resolution, acquired over three years and in different months (spatio-temporal domains). Aerial imagery patches consist of 5 channels (RGB, near-infrared, elevation) and have corresponding annotations (with 19 semantic classes or 13 for the… See the full description on the dataset page: https://huggingface.co/datasets/IGNF/FLAIR-1-2.

FLAIR-HUB

- huggingface.co

Cite: Institut national de l'information géographique et forestière (2025). FLAIR-HUB [Dataset]. https://huggingface.co/datasets/IGNF/FLAIR-HUB

- Dataset updated: Jun 4, 2025
- Dataset authored and provided by: Institut national de l'information géographique et forestière
- License: https://choosealicense.com/licenses/etalab-2.0/

Description: FLAIR-HUB: Large-scale Multimodal Dataset for Land Cover and Crop Mapping

FLAIR-HUB builds upon and includes the FLAIR#1 and FLAIR#2 datasets, expanding them into a unified, large-scale, multi-sensor land-cover resource with very-high-resolution annotations. Spanning over 2,500 km² of diverse French ecoclimates and landscapes, it features 63 billion hand-annotated pixels across 19 land-cover and 23 crop type classes. The dataset integrates complementary data sources including… See the full description on the dataset page: https://huggingface.co/datasets/IGNF/FLAIR-HUB.
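The pixel counts and areas quoted in the two IGNF listings can be cross-checked with simple arithmetic. The following is a minimal sketch, assuming every annotated pixel covers 0.2 m × 0.2 m on the ground (the FLAIR-1-2 listing states this resolution; applying it to all of FLAIR-HUB is an assumption) and that annotated pixels do not overlap. The function name `annotated_area_km2` is hypothetical.

```python
def annotated_area_km2(n_pixels: int, gsd_m: float = 0.2) -> float:
    """Ground area in km² covered by n_pixels square pixels of side gsd_m metres."""
    area_m2 = n_pixels * gsd_m * gsd_m
    return area_m2 / 1_000_000  # 1 km² = 1,000,000 m²


# Lower bounds: both listings say "over" these pixel counts.
flair_1_2_km2 = annotated_area_km2(20_000_000_000)  # FLAIR-1-2: 20 billion pixels
flair_hub_km2 = annotated_area_km2(63_000_000_000)  # FLAIR-HUB: 63 billion pixels

print(round(flair_1_2_km2), round(flair_hub_km2))
```

Under these assumptions, 63 billion pixels correspond to roughly 2,520 km², which is consistent with the "over 2,500 km²" quoted for FLAIR-HUB, and 20 billion pixels correspond to roughly 800 km².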

FLAIR isoforms | cDNA 1

- figshare.com

Cite: Ivan de la Rubia (2021). FLAIR isoforms | cDNA 1 [Dataset]. http://doi.org/10.6084/m9.figshare.14578854.v1

- Available download formats: txt
- Unique identifier: https://doi.org/10.6084/m9.figshare.14578854.v1
- Dataset updated: May 31, 2021
- Dataset provided by: figshare
- Authors: Ivan de la Rubia
- License: https://www.gnu.org/licenses/gpl-3.0.html

Description: FLAIR results on RATTLE paper data

FLAIR1_mmseg

- huggingface.co

Cite: HEIG-Vd Geomatic (2025). FLAIR1_mmseg [Dataset]. https://huggingface.co/datasets/heig-vd-geo/FLAIR1_mmseg

- Dataset updated: May 28, 2025
- Dataset authored and provided by: HEIG-Vd Geomatic
- License: Apache License, v2.0, https://www.apache.org/licenses/LICENSE-2.0 (license information was derived automatically)

Description: FLAIR#1 Dataset for MMSegmentation

This repository provides the official FLAIR#1 dataset, formatted for compatibility with the MMSegmentation framework. The dataset is structured to maintain the original folder hierarchy as required by MMSegmentation.

Structure: Ensure that the dataset is organized as follows after extraction: flair/ ├── images/ │ ├── train/ │ ├── images/ │ └── masks/ │ ├── val/ │ ├── images/ │ └── masks/ │ └── test/ │ ├──… See the full description on the dataset page: https://huggingface.co/datasets/heig-vd-geo/FLAIR1_mmseg.

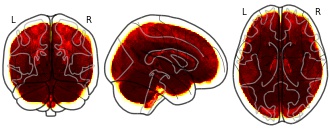

pvs_shiva_t1_flair_1.h5

- figshare.com

Cite: Nick Tustison (2024). pvs_shiva_t1_flair_1.h5 [Dataset]. http://doi.org/10.6084/m9.figshare.26785147.v1

- Available download formats: hdf
- Unique identifier: https://doi.org/10.6084/m9.figshare.26785147.v1
- Dataset updated: Aug 20, 2024 (+ more versions)
- Authors: Nick Tustison
- License: Attribution 4.0 (CC BY 4.0), https://creativecommons.org/licenses/by/4.0/ (license information was derived automatically)

Description: pvs shiva t1 flair 1

Reddit AskScience Flair Analysis Dataset

- kaggle.com

Cite: Sumit Mishra (2025). Reddit AskScience Flair Analysis Dataset [Dataset]. https://www.kaggle.com/datasets/sumitm004/reddit-raskscience-flair-dataset

- Croissant: available (Croissant is a format for machine-learning datasets; learn more at mlcommons.org/croissant)
- Dataset updated: Feb 15, 2025
- Authors: Sumit Mishra
- License: Open Data Commons Attribution License (ODC-By) v1.0, https://www.opendatacommons.org/licenses/by/1.0/ (license information was derived automatically)

Description

Context

Reddit is a massive platform for news, content, and discussions, hosting millions of active users daily. Among its vast number of subreddits, we focus on the r/AskScience community, where users engage in science-related discussions and questions.

Content

This dataset is derived from the r/AskScience subreddit, collected between January 1, 2016, and May 20, 2022. It includes 612,668 datapoints across 22 columns, featuring diverse information such as the content of the questions, submission descriptions, associated flairs, NSFW/SFW status, year of submission, and more. The data was extracted using Python and Pushshift's API, followed by some cleaning with NumPy and pandas. Detailed column descriptions are available for clarity.

Ideas for Usage

- Flair Prediction: Train models to predict post flairs (e.g., 'Science', 'Ask', 'Discussion') to automate content categorization for platforms like Reddit.

- NSFW Classification: Classify posts as SFW or NSFW based on textual content, enabling content moderation tools for online forums.

- Text Mining / NLP Tasks: Apply NLP techniques like Sentiment Analysis, Topic Modeling, and Text Classification to explore the content and themes of science-related discussions.

- Community Engagement Analysis: Investigate which post types or flairs generate more engagement (e.g., upvotes or comments), offering insights into user interaction.

- Trend Detection in Science Topics: Identify emerging science topics and analyze shifts in interest areas, which can help predict future trends in scientific discussions.
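As an illustration of the community engagement idea in the list above, the following is a minimal sketch that averages a score per flair using only the standard library. The column names `flair` and `score` are assumptions, since the listing does not name the 22 columns, and the toy rows are invented for the example rather than taken from the dataset.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_flair(rows, flair_key="flair", score_key="score"):
    """Group rows by flair and return {flair: mean score}.

    flair_key and score_key are hypothetical column names.
    """
    scores = defaultdict(list)
    for row in rows:
        # Posts without a flair are collected under a placeholder label.
        flair = row.get(flair_key) or "(no flair)"
        scores[flair].append(float(row[score_key]))
    return {flair: mean(values) for flair, values in scores.items()}


# Invented toy rows for illustration only.
toy_rows = [
    {"flair": "Physics", "score": 10},
    {"flair": "Physics", "score": 30},
    {"flair": "Biology", "score": 5},
    {"flair": None, "score": 1},
]
result = mean_score_by_flair(toy_rows)
print(result)
```

With the real data, the rows could come from `csv.DictReader` or a pandas DataFrame once the actual column names are known.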

Flair Circle Cross Street Data in Houston, TX

- ownerly.com

Cite: Ownerly (2022). Flair Circle Cross Street Data in Houston, TX [Dataset]. https://www.ownerly.com/tx/houston/flair-cir-home-details

- Dataset updated: Jun 9, 2022
- Dataset authored and provided by: Ownerly
- Area covered: Texas, Houston, Flair Circle

Description: This dataset provides information about the number of properties, residents, and average property values for Flair Circle cross streets in Houston, TX.

FLAIR_1_osm_clip

- huggingface.co

Cite: Institut national de l'information géographique et forestière (2023). FLAIR_1_osm_clip [Dataset]. https://huggingface.co/datasets/IGNF/FLAIR_1_osm_clip

- Croissant: available (Croissant is a format for machine-learning datasets; learn more at mlcommons.org/croissant)
- Dataset updated: Aug 18, 2023
- Dataset authored and provided by: Institut national de l'information géographique et forestière

Description: Dataset Card for "FLAIR_OSM_CLIP"

Dataset for the Seg2Sat model: https://github.com/RubenGres/Seg2Sat

Derived from the FLAIR#1 train split. This dataset includes the following features:

- image: FLAIR#1 .tif files, RGB bands converted into a more manageable JPG format
- segmentation: FLAIR#1 segmentation converted to JPG using the LUT from the documentation
- metadata: OSM metadata for the centroid of the image
- clip_label: CLIP ViT-H description
- class_rep: ratio of appearance of each class in…

See the full description on the dataset page: https://huggingface.co/datasets/IGNF/FLAIR_1_osm_clip.

Pre-trained Flair and BERT weights (Spanish biomedical literature, SciELO)...

- narcis.nl

- data.mendeley.com

Cite: Akhtyamova, L (via Mendeley Data) (2020). Pre-trained Flair and BERT weights (Spanish biomedical literature, SciELO) and corpus [Dataset]. http://doi.org/10.17632/vf6jmvz83b.1

- Unique identifier: https://doi.org/10.17632/vf6jmvz83b.1
- Dataset updated: Jul 23, 2020 (+ more versions)
- Dataset provided by: Data Archiving and Networked Services (DANS)
- Authors: Akhtyamova, L (via Mendeley Data)

Description: This zipped folder includes pre-trained weights of BERT and Flair models. Both models were trained from scratch on Spanish biomedical literature texts obtained from the SciELO website. The corpus on which the models were trained is also included. It consists of over 86B tokens. More details on the corpus and training process can be found in our paper "Testing Contextualized Word Embeddings to Improve NER in Spanish Clinical Case Narratives", doi: 10.21203/rs.2.22697/v2

Replication data for: big field of view mri t1w and flair template: nmri225...

- service.tib.eu

Cite: (2025). Replication data for: big field of view mri t1w and flair template: nmri225 - Vdataset - LDM [Dataset]. https://service.tib.eu/ldmservice/dataset/goe-doi-10-25625-swasih

- Dataset updated: May 16, 2025
- License: CC0 1.0 Universal Public Domain Dedication, https://creativecommons.org/publicdomain/zero/1.0/ (license information was derived automatically)

Description: This repository includes the NMRI225 T1 and FLAIR templates in isotropic 0.5 mm and 1 mm resolutions.

Flair Drive Cross Street Data in Montgomery, AL

- ownerly.com

Cite: Ownerly (2021). Flair Drive Cross Street Data in Montgomery, AL [Dataset]. https://www.ownerly.com/al/montgomery/flair-dr-home-details

- Dataset updated: Dec 8, 2021
- Dataset authored and provided by: Ownerly
- Area covered: Montgomery, Alabama, Flair Drive

Description: This dataset provides information about the number of properties, residents, and average property values for Flair Drive cross streets in Montgomery, AL.

Volumes and numbers of eVRS obtained on PSIR, T1 and FLAIR images.

- plos.figshare.com

Cite: Alice Favaretto; Andrea Lazzarotto; Alice Riccardi; Stefano Pravato; Monica Margoni; Francesco Causin; Maria Giulia Anglani; Dario Seppi; Davide Poggiali; Paolo Gallo (2023). Volumes and numbers of eVRS obtained on PSIR, T1 and FLAIR images. [Dataset]. http://doi.org/10.1371/journal.pone.0185626.t002

- Available download formats: xls
- Unique identifier: https://doi.org/10.1371/journal.pone.0185626.t002
- Dataset updated: Jun 4, 2023
- Dataset provided by: PLOS ONE
- Authors: Alice Favaretto; Andrea Lazzarotto; Alice Riccardi; Stefano Pravato; Monica Margoni; Francesco Causin; Maria Giulia Anglani; Dario Seppi; Davide Poggiali; Paolo Gallo
- License: Attribution 4.0 (CC BY 4.0), https://creativecommons.org/licenses/by/4.0/ (license information was derived automatically)

Description: Volumes and numbers of eVRS obtained on PSIR, T1 and FLAIR images.

Flair Drive Cross Street Data in Oklahoma City, OK

- ownerly.com

Cite: Ownerly (2021). Flair Drive Cross Street Data in Oklahoma City, OK [Dataset]. https://www.ownerly.com/ok/oklahoma-city/flair-dr-home-details

- Dataset updated: Dec 9, 2021
- Dataset authored and provided by: Ownerly
- Area covered: Oklahoma, Oklahoma City, Flair Drive

Description: This dataset provides information about the number of properties, residents, and average property values for Flair Drive cross streets in Oklahoma City, OK.

BRAHMA: Population specific T1, T2, and FLAIR weighted brain templates and...

- neurovault.org

Cite: (2020). BRAHMA: Population specific T1, T2, and FLAIR weighted brain templates and their impact in structural and functional imaging studies: Tissue Probability Maps - (volume 1) [Dataset]. http://identifiers.org/neurovault.image:394141

- Unique identifier: https://identifiers.org/neurovault.image:394141
- Dataset updated: Jun 18, 2020 (+ more versions)
- License: CC0 1.0 Universal Public Domain Dedication, https://creativecommons.org/publicdomain/zero/1.0/ (license information was derived automatically)

Description

Collection description

A dataset of high-resolution 3D T1, T2-weighted, and FLAIR images acquired from a group of 113 volunteers (M/F: 56/57, mean age 28.96 ± 7.80 years) is used to construct T1, T2-weighted, and FLAIR templates, collectively referred to as the Indian Brain Template, "BRAHMA". Additional tissue probability maps and segmentation atlases, with additional labels for deep brain regions such as the Substantia Nigra, are also generated from the T2-weighted and FLAIR templates.

- Subject species: homo sapiens
- Modality: Structural MRI
- Analysis level: other
- Cognitive paradigm (task): None / Other
- Map type: A

3 Flare Dataset

- universe.roboflow.com

Cite: 54UVC (2024). 3 Flare Dataset [Dataset]. https://universe.roboflow.com/54uvc/3-flare/dataset/2

- Available download formats: zip
- Dataset updated: Mar 18, 2024
- Dataset authored and provided by: 54UVC
- License: Attribution 4.0 (CC BY 4.0), https://creativecommons.org/licenses/by/4.0/ (license information was derived automatically)
- Variables measured: Red_flare Bounding Boxes

Description: 3 Flare

## Overview

3 Flare is a dataset for object detection tasks. It contains Red_flare annotations for 4,781 images.

## Getting Started

You can download this dataset for use within your own projects, or fork it into a workspace on Roboflow to create your own model.

## License

This dataset is available under the [CC BY 4.0 license](https://creativecommons.org/licenses/by/4.0/).

Replication data for: big field of view mri t1w and flair template: nmri225...

- service.tib.eu

Cite: (2025). Replication data for: big field of view mri t1w and flair template: nmri225 | iterations [Dataset]. https://service.tib.eu/ldmservice/dataset/goe-doi-10-25625-7euooi

- Dataset updated: May 16, 2025
- License: CC0 1.0 Universal Public Domain Dedication, https://creativecommons.org/publicdomain/zero/1.0/ (license information was derived automatically)

Description: This includes the templates after iterations 1 through 7.

Towards the Interpretability of Deep Learning Models for Multi-modal...

- neurovault.org

Cite: (2022). Towards the Interpretability of Deep Learning Models for Multi-modal Neuroimaging: Finding Structural Changes of the Ageing Brain: Difference relevance maps of T1-FLAIR [Dataset]. http://identifiers.org/neurovault.image:783828

- Available download formats: nifti
- Unique identifier: https://identifiers.org/neurovault.image:783828
- Dataset updated: Jul 27, 2022 (+ more versions)
- License: CC0 1.0 Universal Public Domain Dedication, https://creativecommons.org/publicdomain/zero/1.0/ (license information was derived automatically)

Description: After subtracting relevance maps per participant in the two MRI sequences (T1-FLAIR) from each other, 1-sample t-tests were computed to find areas which showed significant differences between the modalities.

Collection description

Brain-age (BA) estimates based on deep learning are increasingly used as neuroimaging biomarker for brain health; however, the underlying neural features have remained unclear. We combined ensembles of convolutional neural networks with Layer-wise Relevance Propagation (LRP) to detect which brain features contribute to BA. Trained on magnetic resonance imaging (MRI) data of a population-based study (n=2637, 18-82 years), our models estimated age accurately based on single and multiple modalities, regionally restricted and whole-brain images (mean absolute errors 3.37-3.86 years). We find that BA estimates capture aging at both small and large-scale changes, revealing gross enlargements of ventricles and subarachnoid spaces, as well as white matter lesions, and atrophies that appear throughout the brain. Divergence from expected aging reflected cardiovascular risk factors and accelerated aging was more pronounced in the frontal lobe. Applying LRP, our study demonstrates how superior deep learning models detect brain-aging in healthy and at-risk individuals throughout adulthood.

This collection contains statistical maps from the analysis of the above mentioned LRP relevance maps.

- Subject species: homo sapiens
- Modality: Structural MRI
- Analysis level: group
- Cognitive paradigm (task): rest eyes open
- Map type: T

BrainMetShare

- stanfordaimi.azurewebsites.net

- aimi.stanford.edu

Cite: Microsoft Research (2021). BrainMetShare [Dataset]. https://stanfordaimi.azurewebsites.net/datasets/43070092-2610-4837-9b88-838148d937ef

- Dataset updated: Jan 25, 2021 (+ more versions)
- Dataset authored and provided by: Microsoft Research
- License: https://aimistanford-web-api.azurewebsites.net/licenses/f1f352a6-243f-4905-8e00-389edbca9e83/view

Description: A brain MRI dataset to develop and test improved methods for detection and segmentation of brain metastases. The dataset includes 156 whole brain MRI studies, including high-resolution, multi-modal pre- and post-contrast sequences in patients with at least 1 brain metastasis accompanied by ground-truth segmentations by radiologists.

About 2% of all patients with a primary neoplasm will be diagnosed with brain metastases at the time of their initial diagnosis. As we are getting better at controlling primary cancers, even more patients eventually present with such lesions. Given that brain metastases are often quite treatable with surgery or stereotactic radiosurgery, accurate segmentation of brain metastases is a common job for radiologists. Having algorithms to help detect and localize brain metastasis could relieve radiologists from this tedious but crucial task. Given the success of recent AI techniques on other segmentation tasks, we have put together this gold-standard, labeled MRI dataset to allow for the development and testing of new techniques in these patients with the hopes of spurring research in this area.

This is a dataset of 156 pre- and post-contrast whole brain MRI studies in patients with at least 1 cerebral metastasis. Mean patient age was 63±12 years (range: 29–92 years). Primary malignancies included lung (n = 99), breast (n = 33), melanoma (n = 7), genitourinary (n = 7), gastrointestinal (n = 5), and miscellaneous cancers (n = 5). 64 (41%) had 1–3 metastases, 47 (30%) had 4–10 metastases, and 45 (29%) had >10 metastases. Lesion sizes varied from 2 mm to over 4 cm and were scattered in every region of the brain parenchyma, i.e., the supratentorial and infratentorial regions, as well as the cortical and subcortical structures. It includes 4 different 3D sequences (T1 spin-echo pre-contrast, T1 spin-echo post-contrast, T1 gradient-echo post (using an IR-prepped FSPGR sequence), T2 FLAIR post) in the axial plane, co-registered to each other, resampled to 256 x 256 pixels. Standard dose (0.1 mmol/kg) gadolinium contrast agents were used for all cases. All the images have been skull-stripped by using the Brain Extraction Tool (BET) (Smith SM. Fast robust automated brain extraction. Hum Brain Map. 2002;17:143–155). The brain masks were generated from the precontrast T1-weighted 3D CUBE imaging series using the nordicICE software package (NordicNeuroLab, Bergen, Norway) and propagated to the other sequences.

For 105 cases, we include radiologist-drawn segmentations of the metastatic lesions, stored in folder ‘mets_stanford_release_train’. The segmentations were based on the T1 gradient-echo post-contrast images. The remaining 51 cases are unlabeled and stored in ‘mets_stanford_release_test’. There are 5 folders for each subject in the training group – folder ‘0’ contains T1 gradient-echo post images; folder ‘1’ contains T1 spin-echo pre images; folder ‘2’ contains T1 spin-echo post images; folder ‘3’ contains T2 FLAIR post images; folder ‘seg’ contains a binary mask of the segmented metastases (0, 255). There are 4 folders for each subject in the testing group, which are labelled identically, except for the absence of folder ‘seg’.
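The per-subject folder layout described above can be written down as a small lookup table. The following is a minimal sketch, assuming each subject has its own directory containing the numbered sub-folders. The helper names `expected_folders` and `missing_folders` are hypothetical and not part of any official tooling for this dataset.

```python
from pathlib import Path

# Folder name -> content, as described in the listing above.
SEQUENCE_FOLDERS = {
    "0": "T1 gradient-echo post",
    "1": "T1 spin-echo pre",
    "2": "T1 spin-echo post",
    "3": "T2 FLAIR post",
}
# Binary mask of segmented metastases (values 0 and 255); training cases only.
SEG_FOLDER = "seg"


def expected_folders(labeled: bool) -> list[str]:
    """Folder names expected for one subject (5 if labeled, 4 otherwise)."""
    names = list(SEQUENCE_FOLDERS)
    if labeled:
        names.append(SEG_FOLDER)
    return names


def missing_folders(subject_dir: Path, labeled: bool) -> list[str]:
    """Return the expected folders that are absent from subject_dir."""
    return [
        name
        for name in expected_folders(labeled)
        if not (subject_dir / name).is_dir()
    ]
```

A check such as `missing_folders(Path("mets_stanford_release_train") / subject_id, labeled=True)` could then flag incomplete downloads; the exact location of the subject directories inside the release folders is an assumption here.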

More detailed information on this dataset and the Stanford group’s initial performance on this data set can be found in Grøvik et al., Deep Learning Enables Automatic Detection and Segmentation of Brain Metastases on Multisequence MRI, JMRI 2019; 51(1):175-182.

We would like to thank the team involved with labeling and preparing the data and for checking it for potential PHI: Darvin Yi, Endre Grovik, Elizabeth Tong, Michael Iv, Daniel Rubin, Greg Zaharchuk, and Ghiam Yamin, and the Division of Neuroimaging at Stanford for supporting this project.

Grøvik et al., Deep Learning Enables Automatic Detection and Segmentation of Brain Metastases on Multisequence MRI, JMRI 2019; 51(1):175-182 also available on ArXiv (https://arxiv.org/abs/1903.07988).

Additional file 1 of Detecting haplotype-specific transcript variation in...

- figshare.com

Cite: Alison D. Tang; Colette Felton; Eva Hrabeta-Robinson; Roger Volden; Christopher Vollmers; Angela N. Brooks (2024). Additional file 1 of Detecting haplotype-specific transcript variation in long reads with FLAIR2 [Dataset]. http://doi.org/10.6084/m9.figshare.26157242.v1

- Available download formats: txt
- Unique identifier: https://doi.org/10.6084/m9.figshare.26157242.v1
- Dataset updated: Aug 18, 2024
- Authors: Alison D. Tang; Colette Felton; Eva Hrabeta-Robinson; Roger Volden; Christopher Vollmers; Angela N. Brooks
- License: Attribution 4.0 (CC BY 4.0), https://creativecommons.org/licenses/by/4.0/ (license information was derived automatically)

Description: Additional file 1. HSTs identified in hybrid Castaneus x Mouse 129. Columns are as follows: 1) chromosome; 2) position; 3) reference allele; 4) alternate allele; 5) FLAIR isoform containing ENSMUSG gene id; 6) p-value of Fisher’s test; the number of reads supporting the 7) reference or 8) alternate allele in the transcript in column 5; the number of reads supporting the 9) reference or 10) alternate allele in all other transcripts of the same gene.
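The ten columns listed in this description map naturally onto a typed record. The following is a minimal sketch, assuming the txt file is tab-separated with one record per line and no header row (the listing does not confirm either point). The field names and the example line are hypothetical and invented for illustration.

```python
from typing import NamedTuple


class HstRecord(NamedTuple):
    chromosome: str
    position: int
    ref_allele: str
    alt_allele: str
    isoform: str             # FLAIR isoform containing the ENSMUSG gene id
    p_value: float           # Fisher's test
    ref_reads_isoform: int   # reads supporting the reference allele in this isoform
    alt_reads_isoform: int   # reads supporting the alternate allele in this isoform
    ref_reads_other: int     # reference-allele reads in other transcripts of the gene
    alt_reads_other: int     # alternate-allele reads in other transcripts of the gene


def parse_hst_line(line: str, sep: str = "\t") -> HstRecord:
    """Parse one line into an HstRecord; the separator is an assumption."""
    fields = line.rstrip("\n").split(sep)
    if len(fields) != 10:
        raise ValueError(f"expected 10 columns, got {len(fields)}")
    chrom, pos, ref, alt, isoform, p, r1, a1, r2, a2 = fields
    return HstRecord(
        chrom, int(pos), ref, alt, isoform, float(p),
        int(r1), int(a1), int(r2), int(a2),
    )


# Invented example line, not taken from the file.
example = "chr1\t12345\tA\tG\texample_isoform_ENSMUSG00000000000\t0.01\t12\t3\t8\t9"
record = parse_hst_line(example)
print(record.chromosome, record.position, record.p_value)
```

If the real file has a header or a different delimiter, the `sep` argument and a header-skipping step would need to be adjusted accordingly.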

Flare Visual Images Dataset

- universe.roboflow.com

Cite: TOUHID (2025). Flare Visual Images Dataset [Dataset]. https://universe.roboflow.com/touhid/flare-visual-images/model/1

- Available download formats: zip
- Dataset updated: Apr 8, 2025
- Dataset authored and provided by: TOUHID
- License: Attribution 4.0 (CC BY 4.0), https://creativecommons.org/licenses/by/4.0/ (license information was derived automatically)
- Variables measured: C U Bounding Boxes

Description: FLARE VISUAL IMAGES

## Overview

FLARE VISUAL IMAGES is a dataset for object detection tasks. It contains C U annotations for 364 images.

## Getting Started

You can download this dataset for use within your own projects, or fork it into a workspace on Roboflow to create your own model.

## License

This dataset is available under the [CC BY 4.0 license](https://creativecommons.org/licenses/by/4.0/).

FLAIR-1-2

IGNF/FLAIR-1-2

French Land Cover from Aerospace Imagery
